Time for my first yearly post series! As I did last year, I want to do a recap of Google I/O from both perspectives: user and developer. Let’s start with the user.

This time I’m making separate posts for Android Q and everything else (including Android versions older than Q). This one is about everything else. I want to keep my posts short - I don’t like long posts, do you?

As always, there were so many announcements that covering them all would make for a very boring and ill-structured post. So I chose the ones that are the most interesting. If you want to see them revealed (or you don’t trust me), every section title is also a link to that particular moment during the keynote. You’re welcome!

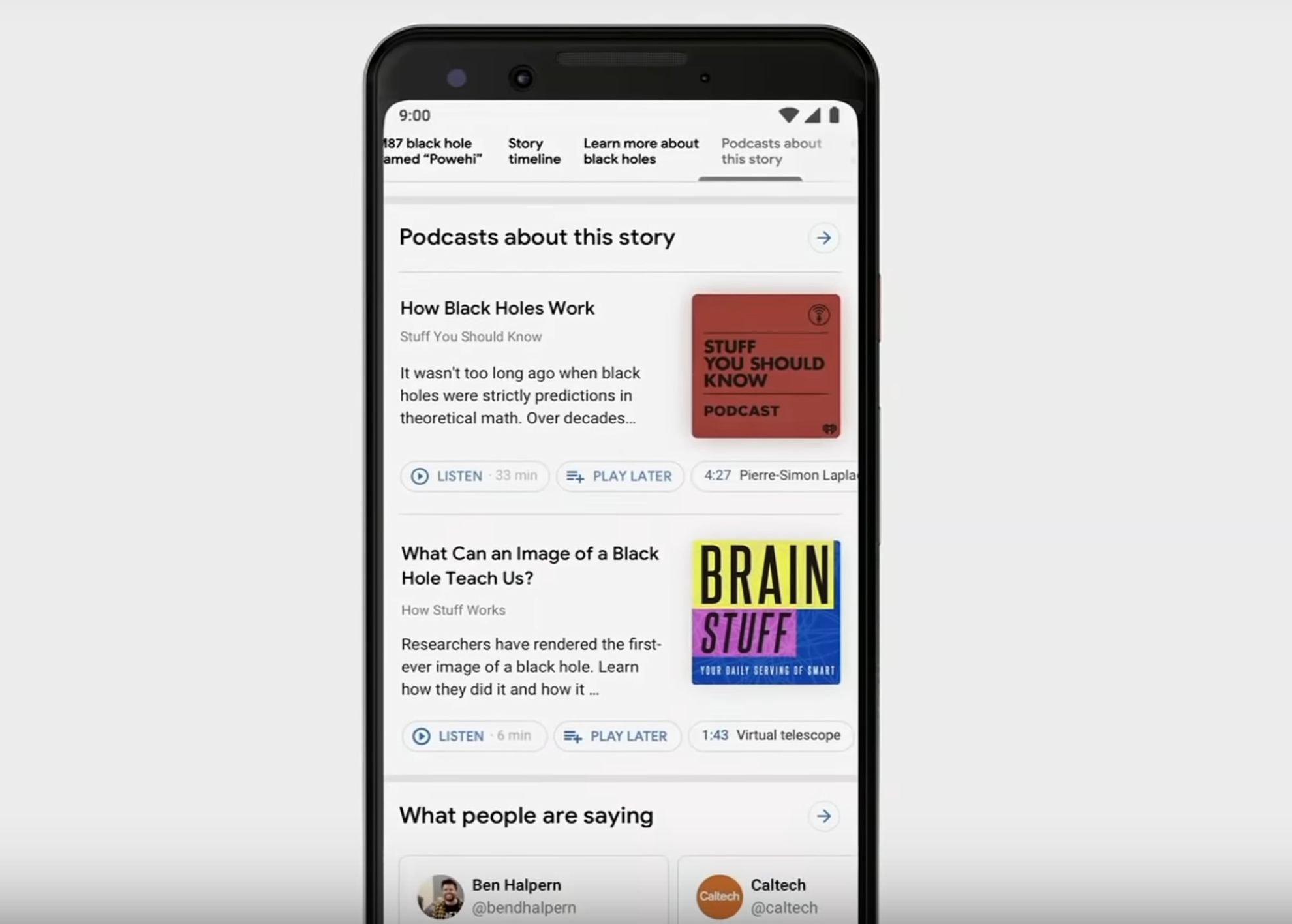

Podcasts in search results

This may sound only mildly interesting - after all, search results are getting richer every year. But Sundar Pichai also said “By indexing podcasts we can surface relevant episodes based on their content, not just the title”. As I understand it, Google will now… analyze podcast content - AI will listen to it, produce a transcription, and that transcription will influence search results? That would be cool, but it’s not so clear for now. It would fit nicely alongside all the other speech-recognition announcements, so it would make sense.

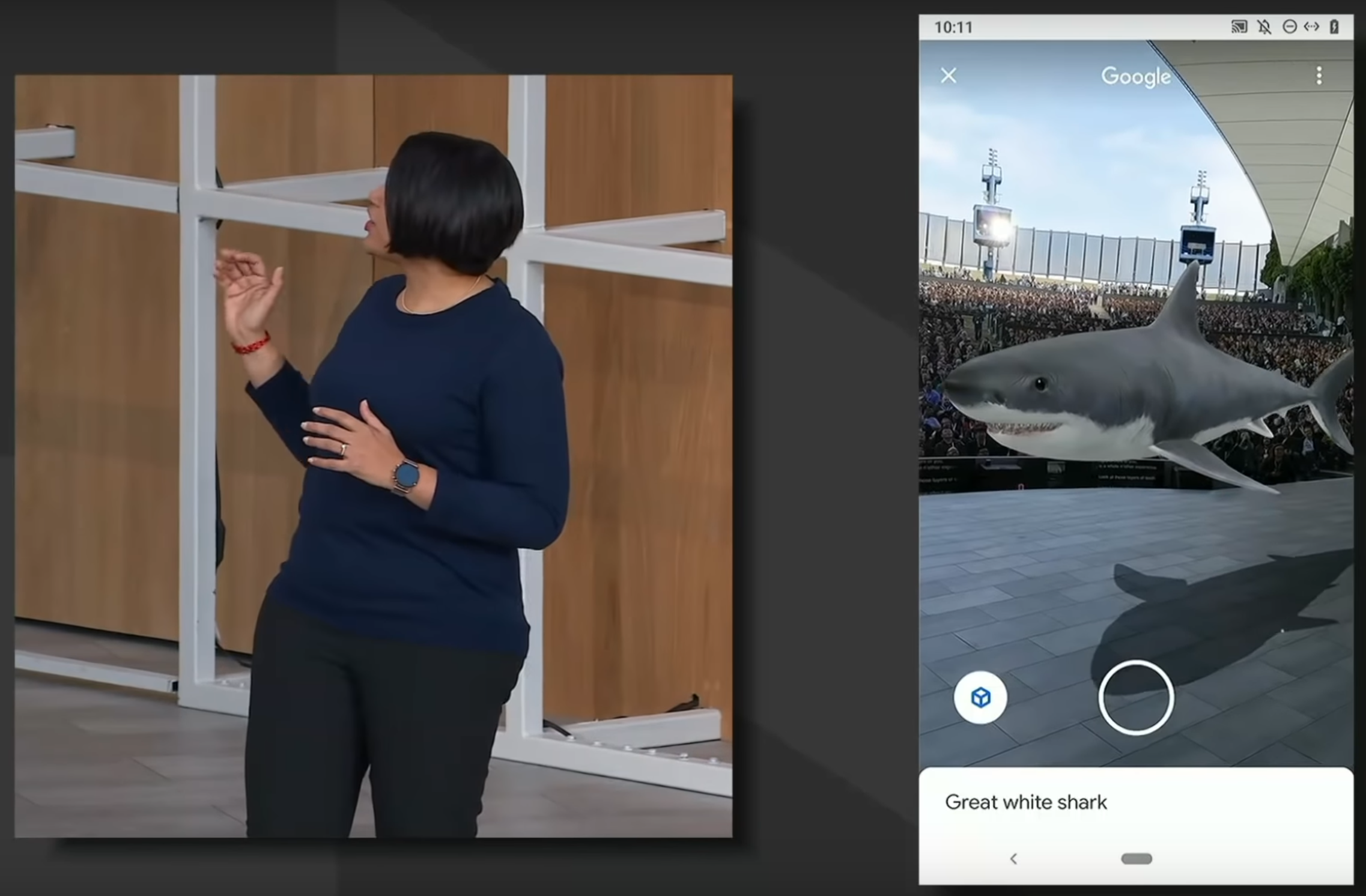

AR in search results

AR was supposed to be the next big thing in technology, but we are not there yet. Google is giving it another try with search results. Searching for a muscle might give us a 3D model of that muscle that we can inspect on the screen or even place on our own desk - AR style. More impressive was placing a great white shark on stage and seeing how big it is in reality compared to the world around it. Cool! I will probably use it once and forget about it, but it’s cool! In the future it will also work for placing clothes or furniture in our home - to see if they match well.

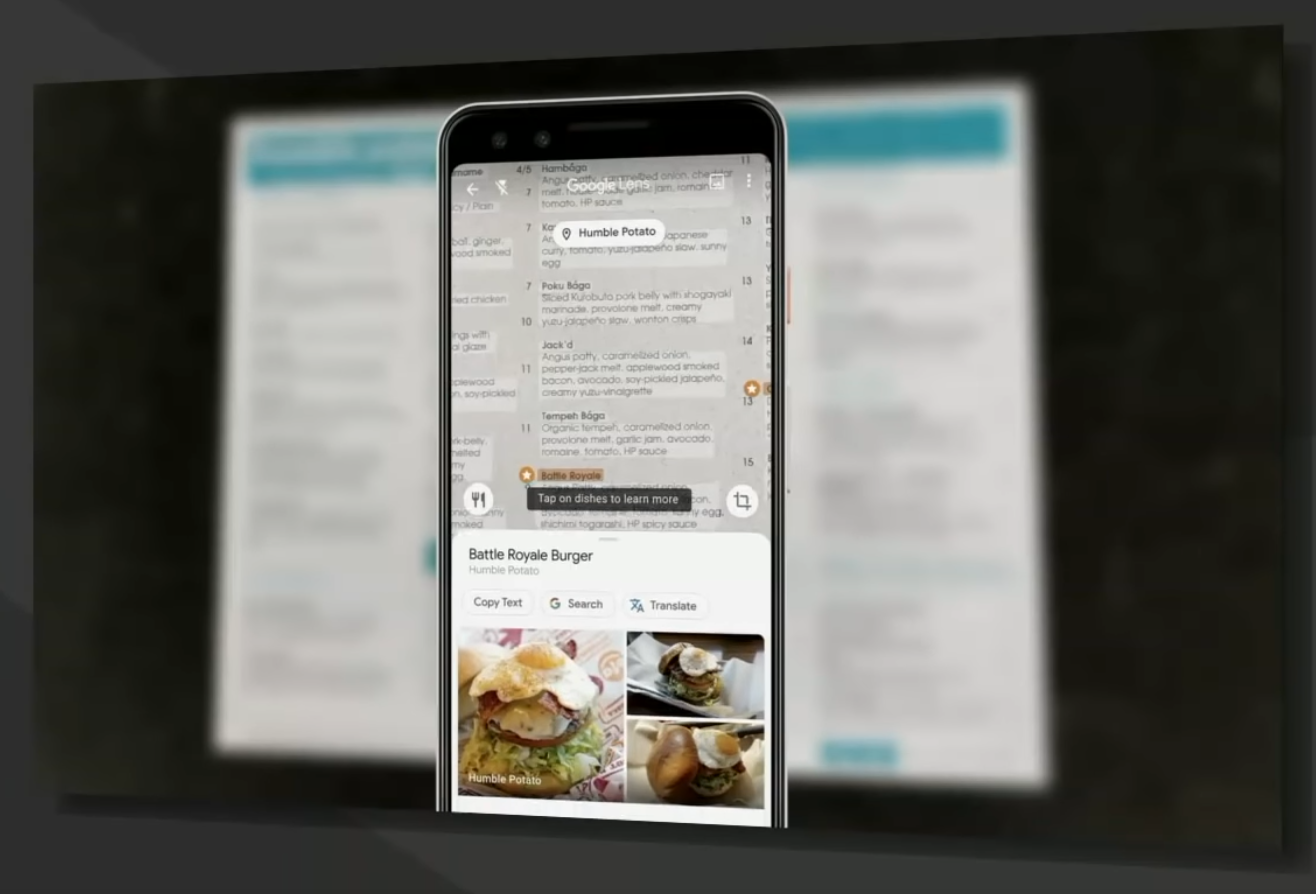

Google Lens with restaurant menu!

That would be so useful! I can’t count how many times I have sat in a restaurant with no idea what to order, because I had no idea what the dishes were like. A list of ingredients doesn’t do them justice. Google Lens should help with that - scanning the menu with the camera would show reviews and PICTURES for the particular item you are pointing at. It’s such an awesome thing, and so awesomely hard technologically, that I’m not sure even Google has enough data to make it happen.

Google Lens with translation and reading out loud

In-picture translation is well known by now - just use Google Translate. But now it’s coming to Google Lens, and as a bonus we get reading out loud - be it the translated or the original text. Why is it supposedly a big thing? Because it will help two excluded groups: people who cannot read and people with impaired vision. Can’t read something? Just let your smartphone read it to you. Making the world more accessible for everyone is always noble. I’m just wondering how a person who cannot read can use their smartphone if they can’t read what is displayed on the screen?

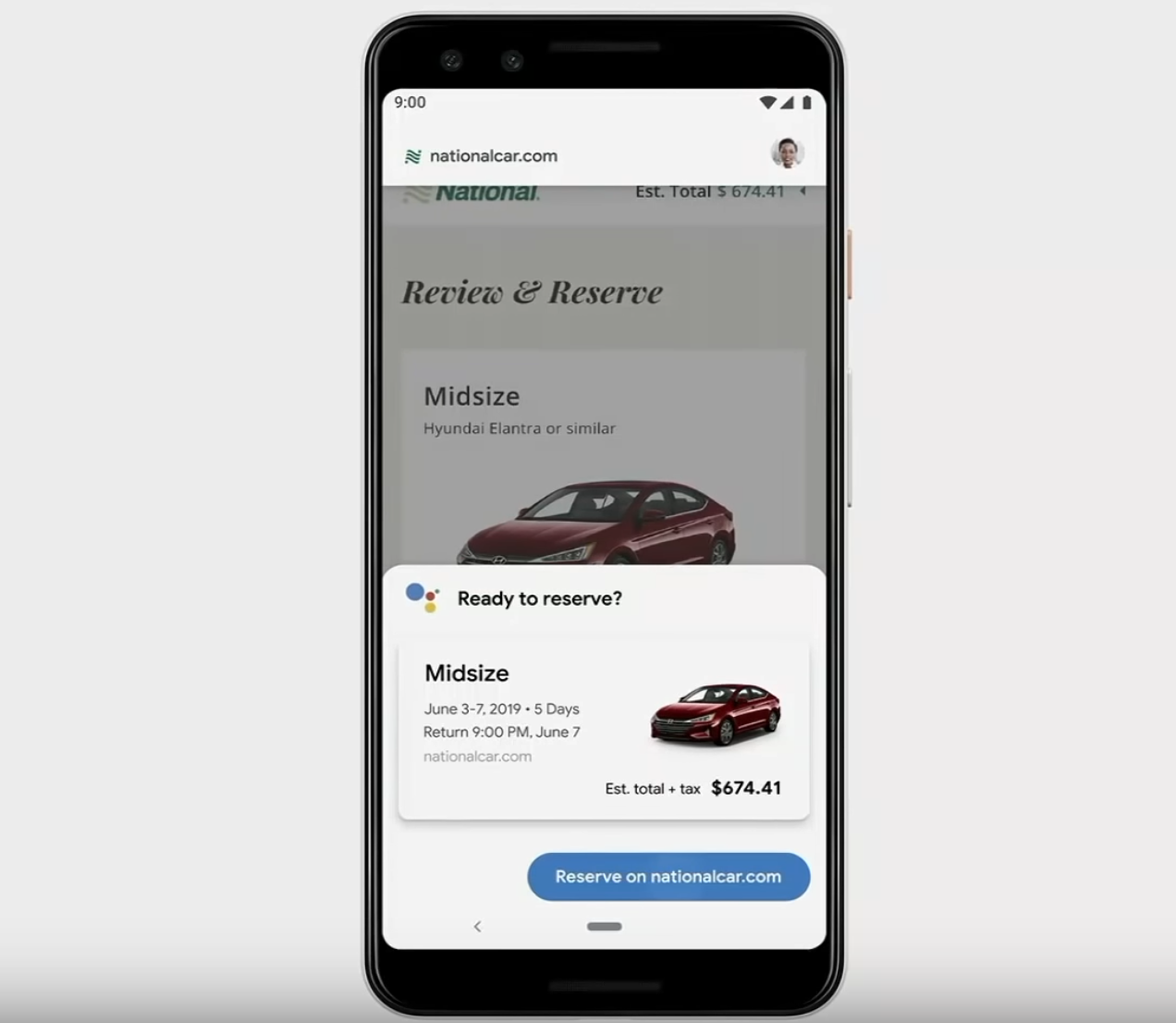

Google Duplex goes to web

Last year Google Duplex was a great revelation. It showed how advanced Google’s AI is, along with its speech recognition and language understanding. It’s time for the next step - the web. Not as revolutionary, but cool nonetheless. Google Assistant should be able to do work on our behalf on the web. The example on stage was really good - going through a car rental form. No one likes forms on the web, especially on mobile, where they are terrible. But they are a necessity.

Having the Assistant go through the whole form automatically would be awesome. What’s important is that the website doesn’t need to be optimized for it at all. The Assistant uses AI to work out which field should be filled with which kind of information. I’m just afraid that the web - especially web forms - is too complex and it will work only on popular websites. That is a big challenge, but I’m keeping my fingers crossed.

Driving mode

Activated with “Ok Google, let’s drive”, the new screen is a dashboard with only the important stuff. It should not distract you while driving, while still providing some essential information. Of course it is backed by AI and gives personalized suggestions for your destination, music and so on.

Google Maps - Incognito mode

Incognito mode in browsers is very handy (get it?). But seriously, apart from porn it has many valid use cases. Incognito mode in Maps? Not so obvious.

Of course, if you don’t want to be tracked at all, that’s cool. Apart from that, you might want to organize a party for your loved one without your place search history spoiling the surprise. Maybe you are going to a doctor and you don’t want that to show up when you are using Maps with your friends. There are some valid scenarios, but I think its use is limited.

More privacy

This topic came up many times during the keynote. No surprise - it is a hot topic. And Google wants to shake off the label of “tracking your every move”. In many places (especially search), deleting your data is easier than ever. You can even set up a “rolling delete” that keeps only your last 3 months of personal data. Everything older - deleted.

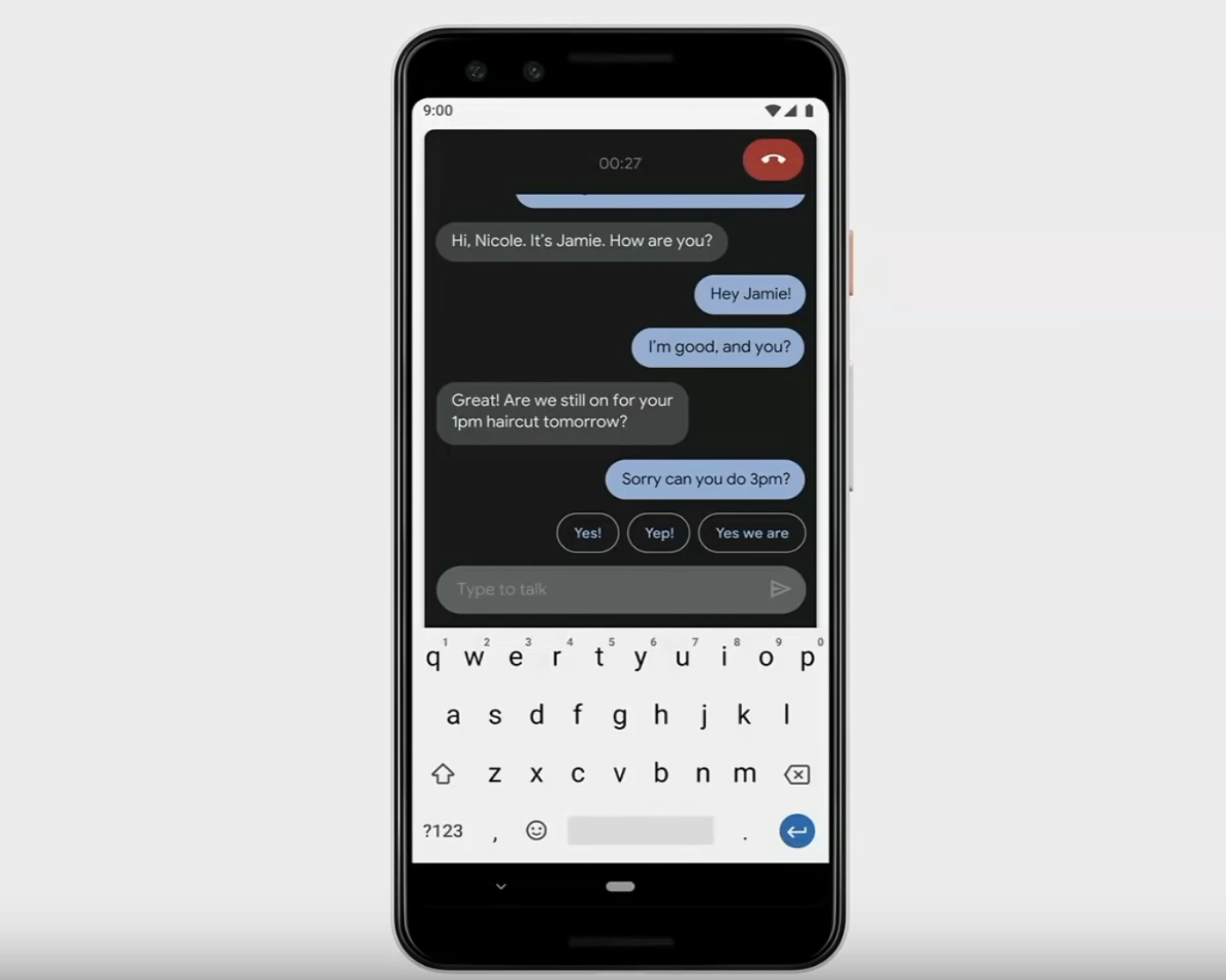

More accessibility

The first thing is targeted at those who are not able to speak - accessible phone calls called “Live Relay”. The person on one end of the call speaks normally, while the person with the impairment on the other end can just type, and the text will be read out loud. Again - not for me, but for some people, life-changing.

For people with speech disorders, another novelty is improved speech recognition. Until now the model was trained only on healthy people, but in the future it should work for everyone, even for those speaking not-so-clearly. Project Euphonia goes one step further and wants to make speech accessible to everyone, no matter their level of disability. I’m all for making lives easier.

Android Q, duh!

Of course there is Android Q coming. But there are so many changes that I decided to put them into a separate article.

Interestingly, Android Q Beta is available for 21 devices from 13 manufacturers. That’s great, because it means you have a better chance of having the newest Android on your phone.

Google Home is now Google Nest

I don’t want to go too much into details, but Google Home is being rebranded as Google Nest, and accordingly Google Home Hub becomes Nest Hub. Google presented a new, improved, more expensive version of it - Nest Hub Max. It has a 10-inch display, a camera, smart home controls, etc. Just read more here. Cool features are: Face Match, muting music just by raising your hand, stopping an alarm with a simple “Stop”.

Google Pixel 3A and 3A XL

Finally there is a phone with a great camera that does not cost $1000 at launch. I was really waiting for it. It feels like a return to the good old Nexus 4 and Nexus 5 days. The camera has Night Sight, portrait mode and Super Res Zoom, so functionality is on par with the Pixel 3. There is also Adaptive Battery, which should optimize energy usage using AI - but who doesn’t do that by now?

In short, it should be a very good device at a very good price. I couldn’t ask for more.

Summary

There was no revolution. No Google Duplex kind of announcement. It’s clear that in most areas there are more iterations than big leaps forward. Products are becoming more mature, and Google cares more about privacy. And now that the products are mature, they can also be accessible, which is always a win in my book!

There are very cool things being done, but this year’s Google I/O didn’t leave me with my jaw on the floor.